I started working on a big project with procedural world generation (think 2D Minecraft or Terraria), and one of the first things I noticed was the awful performance.

Bad performance is nothing new to me and I usually find a way around it, but this project is different: I can't simply cut content or route around it, because there needs to be a lot going on in the scene…

So I decided to get to the bottom of what drives performance and how some things actually work.

- Any and all knowledge about technical limitations or how to improve performance in the engine is more than welcome!!!

THE TESTING GROUNDS

My new game will be all about world generation, so I'll test in a similar way to find the limits.

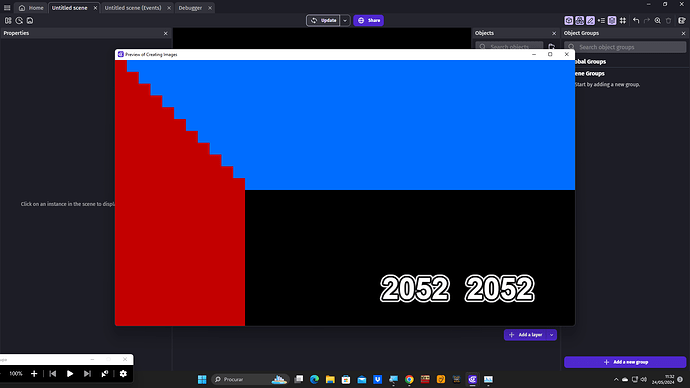

I created a project where, at the start of the scene, it begins generating a 500x500 world made of 2 Tiles, one Red and one Blue.

The world is generated in 2 halves to speed things up: one half is Red, the other Blue.

I also have 2 counters that keep track of how many of each tile have been generated, so I know how many objects are in the scene at any time.
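A minimal sketch of this setup as a toy model in Python (not the actual GDevelop events). It assumes each frame places one tile in each half and that tiles fill column by column; `tiles_per_frame` and the fill order are hypothetical, since the post doesn't say how many tiles are placed per frame.

```python
from dataclasses import dataclass, field

WORLD_SIZE = 500  # 500x500 tiles, as in the test scene


@dataclass
class World:
    tiles: dict = field(default_factory=dict)  # (x, y) -> "Red" or "Blue"
    counters: dict = field(default_factory=lambda: {"Red": 0, "Blue": 0})


def half_cells(x_start: int, x_end: int):
    """Yield grid cells column by column for columns in [x_start, x_end)."""
    for x in range(x_start, x_end):
        for y in range(WORLD_SIZE):
            yield (x, y)


def generate_frame(world, red_cells, blue_cells, tiles_per_frame=1):
    """Place up to tiles_per_frame tiles in each half and update the counters."""
    for color, cells in (("Red", red_cells), ("Blue", blue_cells)):
        for _ in range(tiles_per_frame):
            cell = next(cells, None)
            if cell is None:  # this half is already full
                break
            world.tiles[cell] = color
            world.counters[color] += 1


world = World()
half = WORLD_SIZE // 2
red_cells = half_cells(0, half)            # left half is Red
blue_cells = half_cells(half, WORLD_SIZE)  # right half is Blue
for _frame in range(2000):
    generate_frame(world, red_cells, blue_cells)
print(world.counters)  # {'Red': 2000, 'Blue': 2000}
```

The counters are updated at the moment a tile is placed, so reading them never requires scanning the scene.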

The scene camera is 1280x720 and the Tiles used are 32x32 as standard; if any of this changes, I'll say so.

Base Performance with no Events (other than world generation)

- 2000 Red, 2000 Blue: 7.00ms in total, mostly coming from the render at 3.50ms plus other small things; the events themselves were at 0.80ms

Below is what the screen looks like… most of the Tiles are off screen. This is intentional, to see the difference between on and off screen, or how the total number of objects in the scene affects performance.

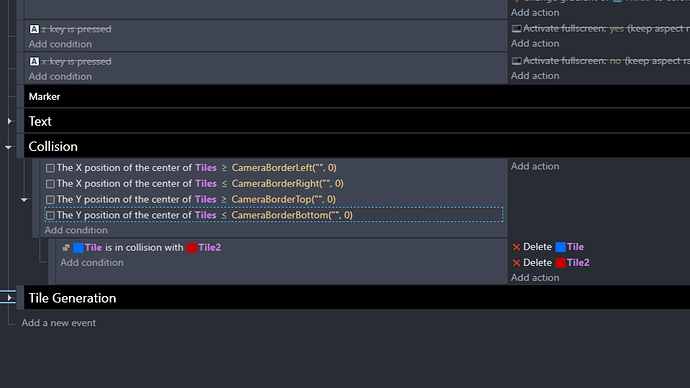

Testing for Collision between Red and Blue

I made a collision check event: if Red and Blue were colliding, those tiles got deleted.

No on screen restrictions:

- 600 Red, 600 Blue: 37.00ms in total, render 0.87ms

- 1000 Red, 1000 Blue: 150.00ms in total, render 1.00ms

- 1500 Red, 1500 Blue: 374.00ms in total, render 1.20ms

- 99% of the latency comes from the “Collision” event
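One plausible reason the collision cost explodes: if every Red tile is tested against every Blue tile (a naive all-pairs check; whether GDevelop does exactly this internally is an assumption), the number of tests grows with the product of the two counts. A toy Python sketch with a hypothetical `Box` type and an axis-aligned bounding box test, counting the pair tests:

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Box:
    x: float  # top-left corner
    y: float
    w: float = 32.0
    h: float = 32.0


def overlaps(a: Box, b: Box) -> bool:
    """Axis-aligned bounding box (AABB) overlap test; touching edges don't count."""
    return a.x < b.x + b.w and b.x < a.x + a.w and a.y < b.y + b.h and b.y < a.y + a.h


def naive_collisions(reds, blues):
    """Test every Red against every Blue; return the colliding pairs and the test count."""
    hits, tests = [], 0
    for r, b in product(reds, blues):
        tests += 1
        if overlaps(r, b):
            hits.append((r, b))
    return hits, tests


counts = {}
for n in (600, 1000, 1500):
    reds = [Box(x=i * 32, y=0) for i in range(n)]
    blues = [Box(x=i * 32, y=64) for i in range(n)]  # a separate row: nothing collides
    _, counts[n] = naive_collisions(reds, blues)
print(counts)  # {600: 360000, 1000: 1000000, 1500: 2250000}
```

Doubling the tiles per color quadruples the number of tests, so the cost grows much faster than the tile count.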

On screen restrictions (only tiles on screen get tested for collision):

- 1500 Red, 1500 Blue: 10.00ms in total, render 1.00ms

- 7.50ms comes from the “Collision” event

This goes to show that you should always limit your events to “On screen” only! …and doing so is quite easy; below you can see how I do it.

DO NOT USE THE “InOnScreen” Behavior; it is absurdly expensive in terms of performance. Use my method instead, it barely costs any latency.
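A generic Python sketch of on-screen culling (an illustration of the idea, not a copy of the events described above): first keep only objects whose box intersects the camera rectangle, then run the pairwise test on those. The `Box` type, the camera placement and the tile layout are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    x: float  # top-left corner, world coordinates
    y: float
    w: float = 32.0
    h: float = 32.0


def overlaps(a: Box, b: Box) -> bool:
    """Axis-aligned bounding box (AABB) overlap test."""
    return a.x < b.x + b.w and b.x < a.x + a.w and a.y < b.y + b.h and b.y < a.y + a.h


def on_screen(objects, camera: Box):
    """Keep only objects whose box intersects the camera rectangle."""
    return [o for o in objects if overlaps(o, camera)]


def culled_collisions(reds, blues, camera: Box):
    """Run the all-pairs test only on on-screen objects; return hits and pair tests."""
    visible_reds = on_screen(reds, camera)
    visible_blues = on_screen(blues, camera)
    tests = len(visible_reds) * len(visible_blues)  # pairs tested below
    hits = [(r, b) for r in visible_reds for b in visible_blues if overlaps(r, b)]
    return hits, tests


camera = Box(x=0, y=0, w=1280, h=720)  # 1280x720 view, top-left at the origin
# 1500 tiles per color in a long strip; only the first 40 columns fall inside the view.
reds = [Box(x=i * 32, y=0) for i in range(1500)]
blues = [Box(x=i * 32, y=16) for i in range(1500)]  # half a tile lower: overlaps its Red
hits, tests = culled_collisions(reds, blues, camera)
print(len(hits), tests)  # 40 1600
```

Culling still touches every object once (3000 cheap checks here), but that grows linearly, while the pairwise test it avoids would be 2,250,000 tests for 1500 tiles per color.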

- This also applies to anything else that “checks” an object, for example “Distance between 2 Objects”: even the tiles off screen get tested against, and if you have a lot of them… say goodbye to performance.

Keep any and all “Checks” on screen only, except in specific situations that need it, like checking how far you are from the end-of-level flag or from an off-screen checkpoint… other than that, stick to on screen.
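Distance checks can be limited the same way. A toy Python sketch, assuming a hypothetical `always_check` list for the few off-screen targets you deliberately care about (such as an end-of-level flag or a checkpoint); everything else is only measured if it is on screen.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Obj:
    name: str
    x: float  # center, world coordinates
    y: float


def in_view(o: Obj, left: float, top: float, width: float, height: float) -> bool:
    """True if the object's center is inside the camera rectangle."""
    return left <= o.x < left + width and top <= o.y < top + height


def distances(player: Obj, objects, view, always_check=()):
    """Distance from the player to on-screen objects plus explicitly listed off-screen ones."""
    candidates = [o for o in objects if in_view(o, *view)]
    candidates += [o for o in always_check if o not in candidates]
    return {o.name: math.dist((player.x, player.y), (o.x, o.y)) for o in candidates}


view = (0, 0, 1280, 720)  # camera left, top, width, height
player = Obj("player", 100, 100)
tiles = [Obj(f"tile{i}", i * 32 + 16, 116) for i in range(1500)]
flag = Obj("flag", 40000, 116)  # end-of-level flag, far off screen
result = distances(player, tiles, view, always_check=[flag])
print(len(result))  # 41: the 40 on-screen tiles plus the flag
```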

Testing if there's a difference between Showing, Hidden and Transparent Objects

Render results

- BASE (no tiles, just a black screen with the text counters): average of 1.90ms

Latency at 3000 total Tiles, on and off screen:

- Showing: average of 3.41ms

- Hidden: average of 2.12ms

- Transparent: average of 3.20ms

Now this is interesting… Hidden objects don't seem to get rendered, while transparent ones do… I think we're making some progress.
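One way to read these numbers: hidden objects are skipped before any drawing work, while fully transparent ones are still processed. A toy Python render loop with that behavior is sketched below; the `Sprite` fields and the skip rule are assumptions chosen to mirror the measurements, not GDevelop's actual renderer code.

```python
from dataclasses import dataclass


@dataclass
class Sprite:
    visible: bool = True
    opacity: int = 255  # 0 = fully transparent, 255 = fully opaque


def render(sprites):
    """Toy render pass: return how many sprites were submitted for drawing."""
    submitted = 0
    for s in sprites:
        if not s.visible:
            continue  # hidden: skipped before any drawing work
        # Opacity is not checked here, so a transparent sprite is still submitted.
        submitted += 1
    return submitted


showing = [Sprite() for _ in range(3000)]
hidden = [Sprite(visible=False) for _ in range(3000)]
transparent = [Sprite(opacity=0) for _ in range(3000)]
print(render(showing), render(hidden), render(transparent))  # 3000 0 3000
```

In this model, hiding an object is what saves the render work; setting its opacity to 0 does not.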

A pixel is a pixel, right? Let's test different object sizes with the camera view completely filled.

- At a 1280x720 resolution, with the entire screen filled with 32x32 Objects lined up in a grid, the frame takes an average of 8.00ms in total, 4.50ms of it coming from the render alone.

The only other things on screen are the tile counters; I'll leave these in for consistency.

Now let's zoom the camera in to 2x, essentially making the objects 64x64 on screen, while still filling up the screen completely.

The tile counters are still on screen, but not zoomed; they are in their own layer.

- Using the same resolution, with every single pixel covered, the latency dropped to 6.00ms, with 3.00ms coming from the render!

It seems that when zooming in, the pixels on screen aren't the same. I was under the impression that zooming in just “scaled things up”, but that's not the case: the pixels just get bigger… and that's a big deal!
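A plausible explanation (an assumption; these measurements don't prove it) is that zooming in doesn't change how many screen pixels get filled, but it does change how many tiles fit in the view. At 2x zoom the camera shows a 640x360 area of the world, so far fewer 32x32 tiles are on screen. A quick count in Python, assuming the grid is aligned with the view's top-left corner:

```python
import math


def visible_tiles(screen_w: int, screen_h: int, tile: int, zoom: float = 1.0) -> int:
    """Number of grid tiles that intersect the view, grid aligned to its top-left."""
    view_w = screen_w / zoom  # world-space width shown by the camera
    view_h = screen_h / zoom  # world-space height shown by the camera
    return math.ceil(view_w / tile) * math.ceil(view_h / tile)


print(visible_tiles(1280, 720, 32, zoom=1))  # 920  (40 x 23)
print(visible_tiles(1280, 720, 32, zoom=2))  # 240  (20 x 12)
```

That is roughly a quarter of the objects to draw for the same screen area.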

I wonder what happens if we use bigger sprites… let's go up to 64x64!

- With 64x64 Tiles and no zoom, the total was 5.50ms and the render 2.10ms

- With the 2x zoom and 64x64 Tiles, the total was 4.65ms and the render 1.45ms

THIS IS MASSIVE! A pixel is NOT a pixel: bigger objects take less rendering than smaller objects, and by using the camera zoom we can improve our performance even more!!
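Counting visible tiles for all four grid configurations (assuming the grid is aligned with the view's top-left corner) puts numbers on "a pixel is not a pixel": the screen pixels the tiles cover stay close to 1280x720 in every case, while the number of objects drops sharply. The render times below are the ones measured in these tests; the rest is arithmetic.

```python
import math

SCREEN_W, SCREEN_H = 1280, 720

# (tile size in px, camera zoom, measured render time in ms from the tests above)
CONFIGS = [(32, 1, 4.50), (32, 2, 3.00), (64, 1, 2.10), (64, 2, 1.45)]


def visible_tiles(tile: int, zoom: int) -> int:
    """Tiles intersecting the view, assuming the grid is aligned to the view's top-left."""
    return math.ceil(SCREEN_W / zoom / tile) * math.ceil(SCREEN_H / zoom / tile)


rows = []
for tile, zoom, render_ms in CONFIGS:
    objects = visible_tiles(tile, zoom)
    side = tile * zoom  # one tile's side length in screen pixels
    pixels = objects * side * side  # screen pixels covered, counting partial tiles in full
    rows.append((f"{tile}px x{zoom}", objects, pixels, render_ms))

print(f"screen: {SCREEN_W * SCREEN_H} px")
for label, objects, pixels, render_ms in rows:
    print(f"{label}: {objects:4d} objects, {pixels:7d} px covered, render {render_ms:.2f}ms")
```

Covered pixels stay between 942,080 and 983,040 (the screen itself is 921,600), while visible objects fall from 920 to 60, so the render time follows the object count far more closely than the pixel count. It is not a perfect fit: 32px at 2x and 64px at 1x both show 240 tiles but measured 3.00ms and 2.10ms, so other factors probably play a part too.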

To me, logically, this makes no sense… a pixel on your screen should cost the same, but it doesn't; using bigger objects is way better for performance…

I wonder if it's because of the collision mask… let's test!!

Let's use this as a base:

- 64x64 Tiles and no zoom: the total was 5.50ms and the render 2.10ms

I'll make the collision mask 32x32 and then test performance again…

- Nope… the collision mask has no performance impact: exact same results, even though the mask was half the width and height.

No idea why bigger objects perform better, then… maybe it has something to do with the engine's cost depending on the actual number of objects and not on what they're made of.

Essentially… the fewer objects the better, but if you'll be using a lot of them, better to make them big!
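If you do need many tiles, one common way to get "fewer but bigger" objects (a technique not tested in this post, so treat it as a suggestion) is to merge each horizontal run of identical adjacent tiles into a single wide object. A minimal Python sketch, assuming a hypothetical world representation: a grid of color names with `None` for empty cells.

```python
from typing import List, Optional, Tuple

Grid = List[List[Optional[str]]]
Run = Tuple[str, int, int, int]  # (color, start column, row, width in tiles)


def merge_rows(grid: Grid) -> List[Run]:
    """Collapse each horizontal run of same-colored tiles into one wide object."""
    runs: List[Run] = []
    for row, cells in enumerate(grid):
        col = 0
        while col < len(cells):
            color, start = cells[col], col
            while col < len(cells) and cells[col] == color:
                col += 1  # extend the run while the color stays the same
            if color is not None:  # empty cells produce no object
                runs.append((color, start, row, col - start))
    return runs


grid = [
    ["Red", "Red", "Red", "Blue", "Blue"],
    ["Red", None, "Blue", "Blue", "Blue"],
]
runs = merge_rows(grid)
print(len(runs), "objects instead of", sum(c is not None for r in grid for c in r))
# 4 objects instead of 9
```

Each run would then be drawn as one object `width * 32` pixels wide. The trade-off is that changing a single tile (for example, digging) means splitting or rebuilding the run it belongs to.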

Just because I can… let's see what one single, screen-wide object does for performance.

- A single screen-wide 1280x720 Object took a total of 1.80ms, with 1.50ms for the render

This further shows that a pixel is not a pixel in GDevelop.

My conclusions so far…

- Keep any and all logic to on screen only

- Use as few objects as possible

- Fill in the gaps with big Sprites

- Fewer but bigger Sprites are better than more but smaller ones

- Use camera zoom if possible to increase performance

Throughout all this, the CPU, GPU and RAM barely got used.

If I think of any more tests to do with this, I'll post them here!