How do I…

I am trying to make a timed bar that fills up in x seconds after the player takes an action.

What is the expected result

I am using a tiled object as the bar, with its full size normalized to 70 pixels. I know the total time (e.g. 10 seconds) and the elapsed time, so the size of the tiled object is basically:

70 * elapsed time / total time
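A minimal Python sketch of that size formula follows. It assumes the bar should stop at 70 pixels once the elapsed time exceeds the total; the clamping and the helper name `bar_width` are hypothetical and not part of the original events.

```python
def bar_width(elapsed: float, total: float, max_width: float = 70.0) -> float:
    """Bar size in pixels: max_width * elapsed / total, clamped to [0, max_width]."""
    if total <= 0:
        raise ValueError("total time must be positive")
    fraction = elapsed / total
    # Assumed behavior: never overshoot the full size or go negative.
    fraction = min(max(fraction, 0.0), 1.0)
    return max_width * fraction


print(bar_width(5.0, 10.0))   # half the total time -> 35.0
print(bar_width(12.0, 10.0))  # past the total time -> clamped to 70.0
```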

The only changing quantity is the “elapsed time”. I have tried implementing it both as a timer and as a variable that is updated every frame: elapsed time = elapsed time + TimeDelta()

The expected result is that, no matter the frame rate (that is, 1/TimeDelta()), the bar fills in the total time (e.g. 10 seconds).
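The expected behavior can be modeled with a short Python simulation. It assumes a perfectly steady frame rate and that `TimeDelta()` returns exactly 1/fps; `seconds_to_fill` is a hypothetical helper, not engine code. Under those assumptions, summing the per-frame delta fills the bar in about 10 real seconds at any frame rate (to within one frame).

```python
def seconds_to_fill(fps: float, total: float = 10.0) -> float:
    """Simulate a steady game loop where every frame adds the frame duration
    (assumed to equal TimeDelta()) to the elapsed time; return real seconds until full."""
    delta = 1.0 / fps          # assumption: TimeDelta() == real frame duration
    elapsed = 0.0
    frames = 0
    while elapsed < total:
        elapsed += delta       # elapsed time = elapsed time + TimeDelta()
        frames += 1
    return frames / fps        # real time that passed for that many frames


for fps in (30, 60, 90, 144):
    print(fps, seconds_to_fill(fps))
```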

What is the actual result

The action works as intended ONLY at frame rates below 60 FPS. When the frame rate is higher (e.g. 90 FPS), the bar takes not 10 but 15 seconds to fill. The in-game timers and all other indicators show that 10 seconds have elapsed, but a real-life stopwatch measures 15 seconds. This is consistent with each frame adding 1/90 of a second to the elapsed time while only 60 frames actually run per real second (60 × 1/90 = 2/3 of a second added per real second, so 10 in-game seconds take 15 real seconds). All in all, it feels as if the engine had a 60 FPS threshold.
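The 15-second figure can be checked with a small Python model. It assumes a steady frame rate, and the helper name `real_seconds_to_fill` is hypothetical; this is plain arithmetic, not GDevelop's internal timing code. If only 60 frames run per real second while each adds 1/90 of a second, filling takes 15 s; if instead 90 frames per second each added 1/60, the bar would fill in about 6.7 s.

```python
def real_seconds_to_fill(actual_fps: float, delta_per_frame: float, total: float = 10.0) -> float:
    """Real time until the elapsed time reaches `total`, when `actual_fps` frames
    really run per second and each frame adds `delta_per_frame` (assumed steady)."""
    frames_needed = total / delta_per_frame
    return frames_needed / actual_fps


print(round(real_seconds_to_fill(60, 1 / 90), 2))  # 15.0
print(round(real_seconds_to_fill(90, 1 / 60), 2))  # 6.67
```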

Is there any explanation for this behavior? What is wrong here?

Note: if I use a fixed number (like 1/60) instead of TimeDelta() in the formula, the opposite happens: the bar fills in 10 seconds at any frame rate ABOVE 60 FPS, but not at lower rates (e.g. it takes 20 seconds to fill at 30 FPS). One workaround would be to check whether the frame rate is above or below 60 FPS and adjust the increment accordingly, but I wonder whether there is a general solution, and whether I am doing something wrong or missing something.
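With a hard-coded step of 1/60, each real second adds actual_fps × 1/60 to the elapsed time, so the fill time is 10 seconds only when exactly 60 frames run per second. The Python sketch below (hypothetical helper `fill_time_fixed_step`, steady frame rate assumed) reproduces the 20-second figure at 30 FPS. Under this model, a 10-second fill while the counter shows more than 60 FPS would suggest that only about 60 frames per second are really being processed.

```python
FIXED_STEP = 1.0 / 60.0  # the hard-coded increment used instead of TimeDelta()


def fill_time_fixed_step(actual_fps: float, total: float = 10.0) -> float:
    """Real seconds to fill when each of `actual_fps` frames per second adds FIXED_STEP."""
    added_per_real_second = actual_fps * FIXED_STEP
    return total / added_per_real_second


print(round(fill_time_fixed_step(30), 2))  # 20.0
print(round(fill_time_fixed_step(60), 2))  # 10.0
print(round(fill_time_fixed_step(90), 2))  # 6.67 if 90 frames per second truly ran
```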

Related screenshots

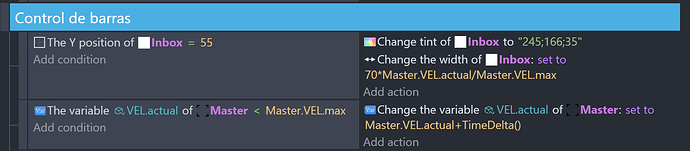

This is the screenshot of the related events. These are the only two events that use the variables and/or the bar object.